While generative artificial intelligence tools like ChatGPT or DALL-E are making a splash, they come with a degree of risk for companies like Freeport.

That’s because any data uploaded into these tools to create text, images, audio or video could be shared with other users, including competitors and cyber criminals.

“Generative AI has gained popularity, but there’s still a lot for us to learn about its safe and responsible use,” said James Costello, Chief Security Information Officer. “We’re on that journey now and working with industry partners to find ways to leverage these tools while protecting our company and employee data.”

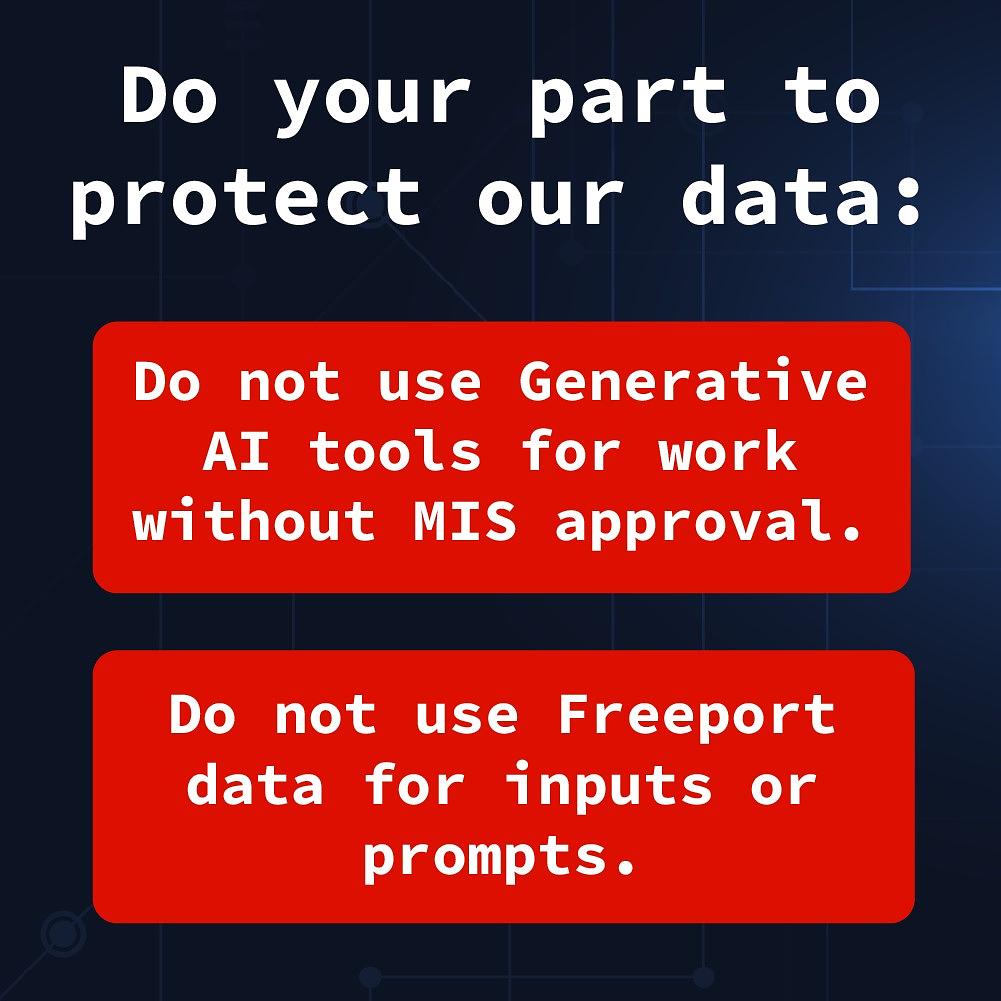

The use of generative AI tools falls under MIS End User Policies that prohibit Freeport-McMoRan employees, contractors and partners from sharing company data with external tools, websites or other parties without the company’s consent.

Employees should contact MIS for written approval before using generative AI tools for work and should refrain from entering company data or information as inputs or prompts. Those who have opened a generative AI account linked to their company email should close it immediately and contact MIS.

If you have questions about the MIS End User Policies or generative AI, please email the MIS Cyber Security team. Follow MIS News on Yammer to stay connected with the latest developments and learn more with these frequently asked questions:

What is generative AI?

These software tools can create text, images, audio or video from basic prompts. While generative AI has existed for many years, it became mainstream with the November 2022 release of ChatGPT, which was widely reported at the time to be the fastest-growing consumer app in history. Many tech companies have since released competing products.

How does generative AI work?

The tools are trained on very large collections of existing text, images and other media, which may include data submitted by users, and they use the patterns learned from that material to create something new. For example, when asked for an essay on the history of mining, ChatGPT draws on what it learned from articles, books and other media on the subject to write a new paper. Other generative AI tools, such as DALL-E, can do the same thing with images.

What are the risks of generative AI?

Generative AI has the potential to transform the way companies do business, but it comes with immediate risk. When someone uploads data or enters a prompt into a tool, the provider may retain it and, depending on the tool's terms, add it to the data used to train future versions. That data could then surface when others interact with the tool. Submitted data may also be linked to the account that entered it and traced back to its source, posing a privacy risk.

Knowing this, cyber criminals are using these tools to try to capture potentially sensitive information. For example, a Freeport employee who enters mine operations data into ChatGPT or a similar tool might be looking to create a quick visualization of that data. But once company data is uploaded to the internet, anyone could potentially access it. To complicate matters, many providers of these tools, seeking to maintain a competitive edge, disclose little about how data is stored or shared.